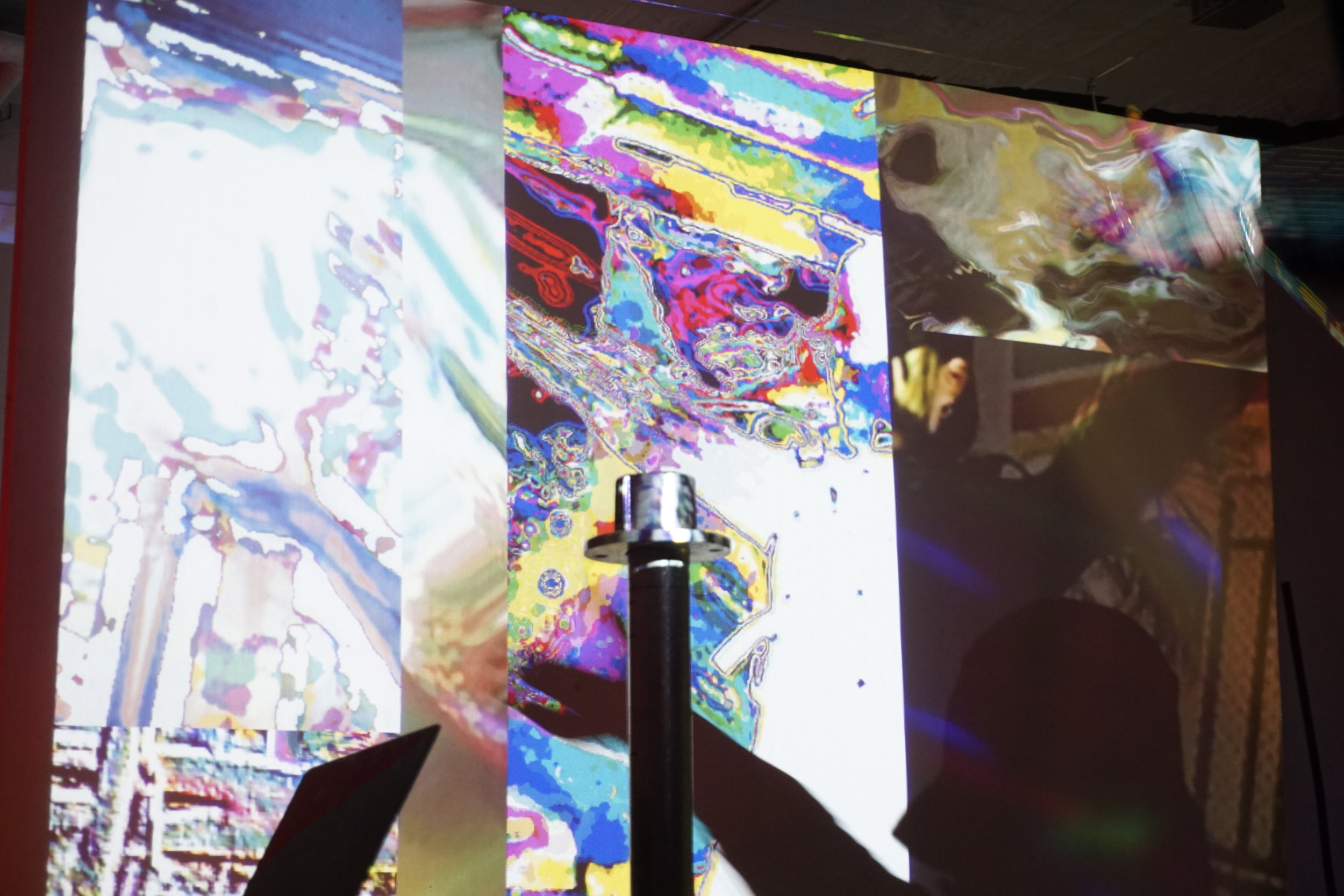

It was a pleasure to perform this piece, titled "AI-generated voice that learned to sound like me reading every comment on 2-hours of Moonlight Sonata on Youtube," at a venue called Baby Castles in New York in 2017.


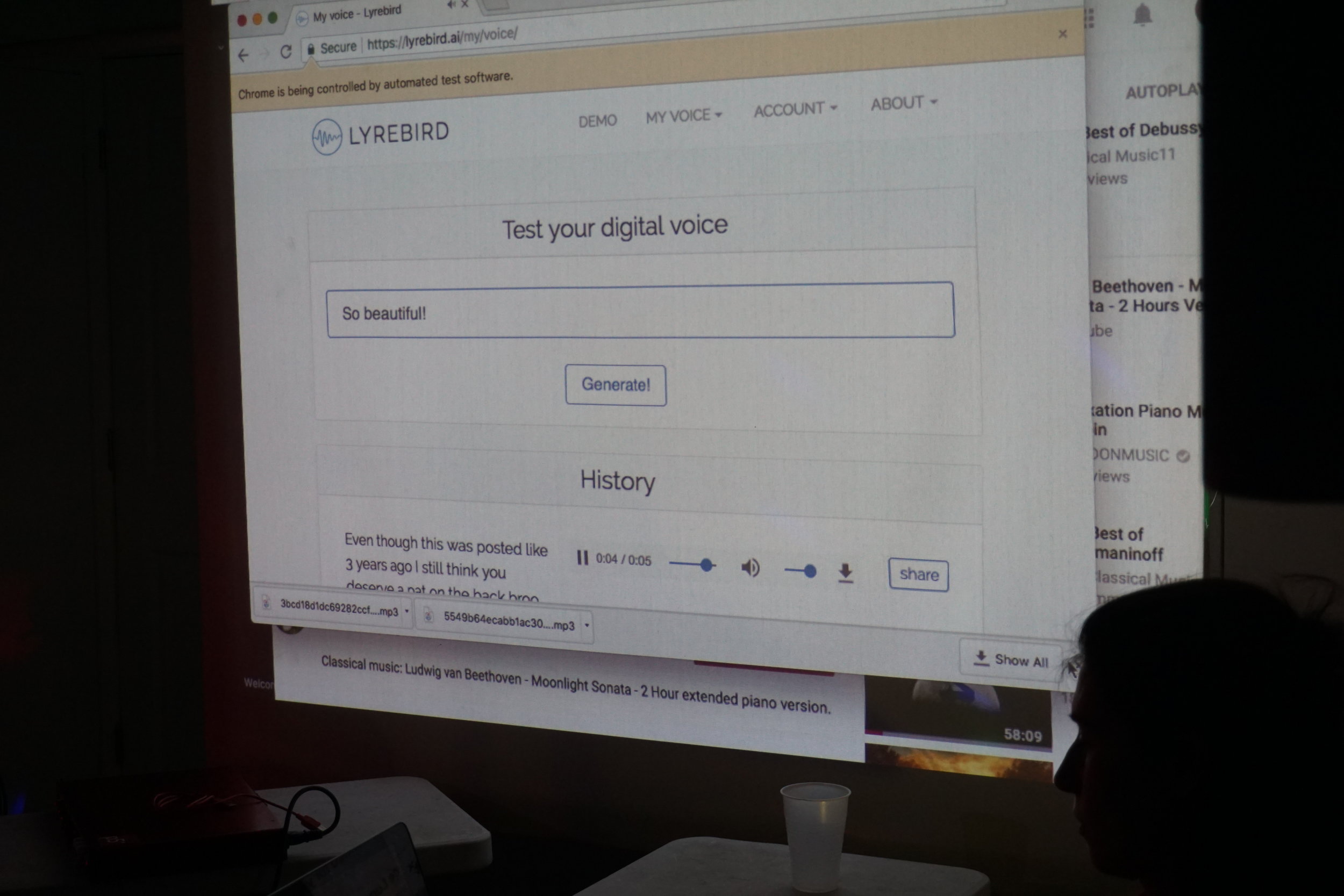

In this project I scraped the YouTube comments from a 2-hour looped version of the first movement of the Moonlight Sonata and saved them to a JSON file. I then used Selenium, a Python library for automating web browsers, to write a script that enters the comments from the JSON file into Lyrebird, which reads each comment aloud in my own AI-generated voice. I had previously trained Lyrebird to sound like me, which adds to the unsettling nature of the project. I based my Selenium code on the code Aaron Montoya-Moraga wrote for his automated emails project.
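The JSON-to-voice step might be sketched in Python roughly as follows. This is not the original script: the JSON layout (a list of objects with a `"text"` key), the page URL, and the CSS selectors are hypothetical placeholders, because the details of the Lyrebird web interface used in the piece are not recorded here. Selenium is imported only inside `make_selenium_speaker`, so the comment-handling part runs on its own.

```python
import json
import time
from typing import Callable, Iterable, List


def parse_comments(raw_json: str) -> List[str]:
    """Extract comment text from a JSON string.

    Hypothetical layout: a list of objects with a "text" key, e.g.
    [{"author": "...", "text": "..."}, ...]. Empty comments are skipped.
    """
    data = json.loads(raw_json)
    return [item["text"].strip() for item in data if item.get("text", "").strip()]


def load_comments(path: str) -> List[str]:
    """Read the scraped comments file from disk."""
    with open(path, encoding="utf-8") as f:
        return parse_comments(f.read())


def read_all(comments: Iterable[str], speak: Callable[[str], None]) -> int:
    """Pass each comment to `speak`, in order; return how many were read."""
    count = 0
    for comment in comments:
        speak(comment)
        count += 1
    return count


def make_selenium_speaker(url: str, textbox_css: str, button_css: str,
                          pause_seconds: float) -> Callable[[str], None]:
    """Return a `speak` function that drives a text-to-speech web page.

    `url`, `textbox_css` and `button_css` are hypothetical placeholders;
    they must be replaced with values from the actual page being automated.
    """
    # Imported here so the JSON helpers above run without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By

    driver = webdriver.Firefox()
    driver.get(url)

    def speak(text: str) -> None:
        # Type the comment into the page's text box and press its play button.
        box = driver.find_element(By.CSS_SELECTOR, textbox_css)
        box.clear()
        box.send_keys(text)
        driver.find_element(By.CSS_SELECTOR, button_css).click()
        # Crude fixed pause so one clip can finish before the next is typed.
        time.sleep(pause_seconds)

    return speak
```

Wired together, a run might look like `read_all(load_comments("comments.json"), make_selenium_speaker("https://example.com/tts", "#text", "#play", 10))`, where every argument is a made-up placeholder. Keeping the browser behind a plain callback means the reading loop can be tried with `print` in place of a live page; a real page would likely need an explicit wait rather than a fixed pause.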

The code for this project can be found on GitHub.

"AI-generated voice that learned to sound like me reading every comment on 2-hours of Moonlight Sonata on Youtube" explores themes of loneliness, banality, and anonymity on the web. Read aloud, the comments give voice to the people who left them on this video. The resulting portrait ranges from banal to uncomfortable to extremely personal.

The piece is meant to be performed live.

___

CAPITAL VOL 1 Reading

This is a separate but similar project that also uses Selenium.

For Capital, Volume 1, I had Lyrebird simply read the book's Amazon reviews aloud, one by one. I'm interested in exploring online communities and how they use products, art, or music as a jumping-off point for further discussion and as a forum for expressing their feelings and views. People often say things online that they cannot say anywhere else, which makes these spaces an interesting way to examine how people view themselves and their environment.

The piece is also meant to be performed live.