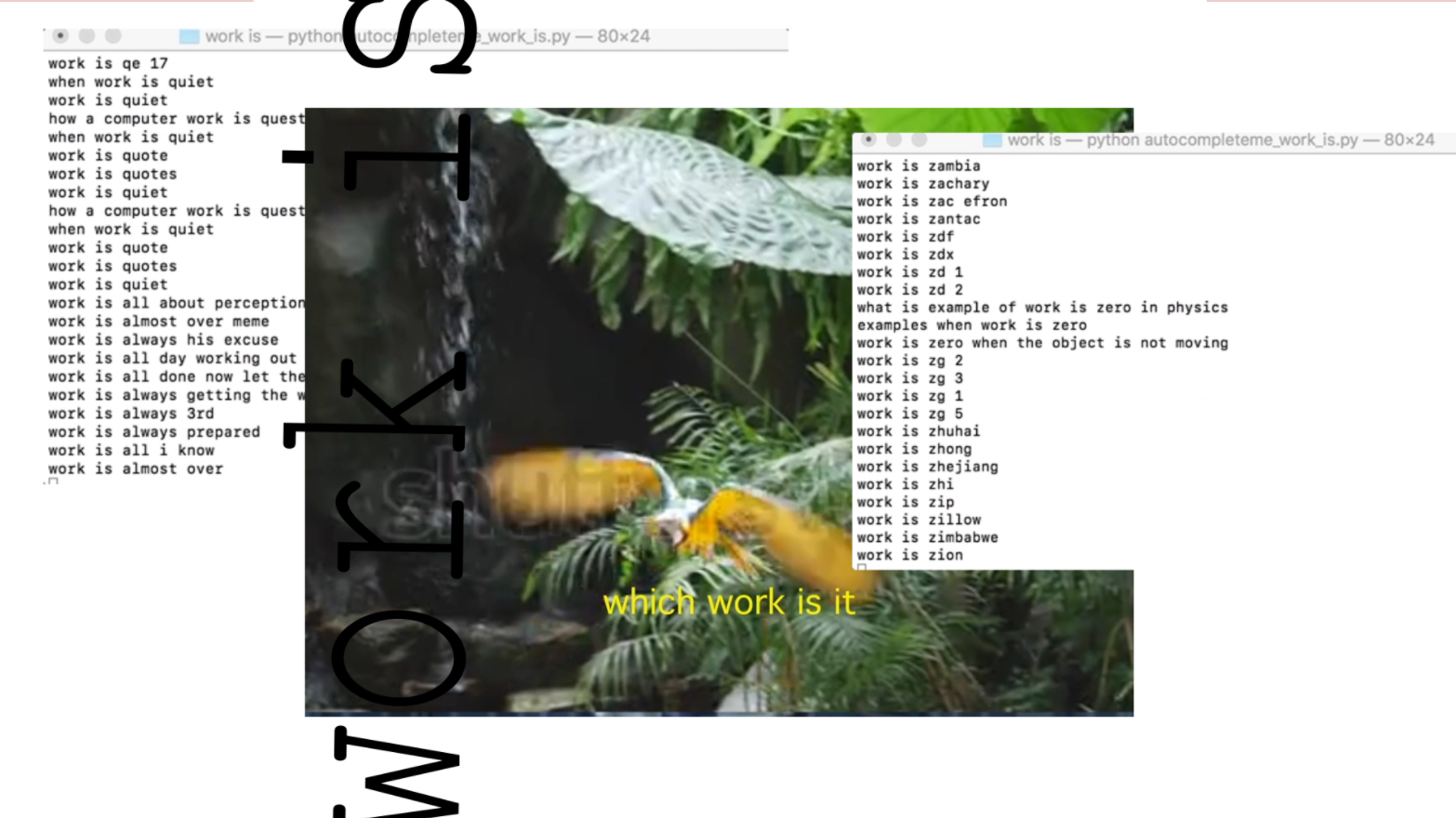

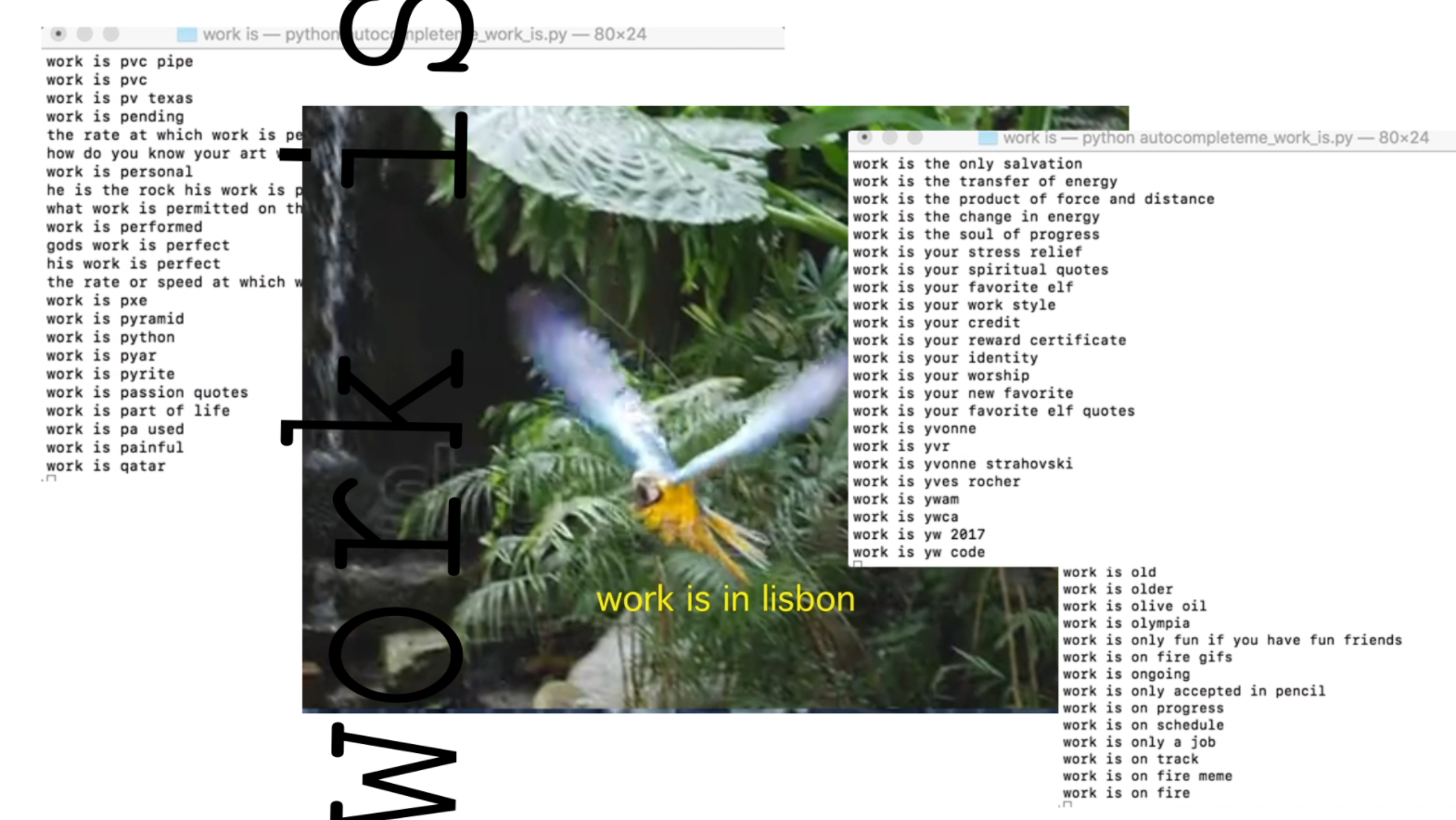

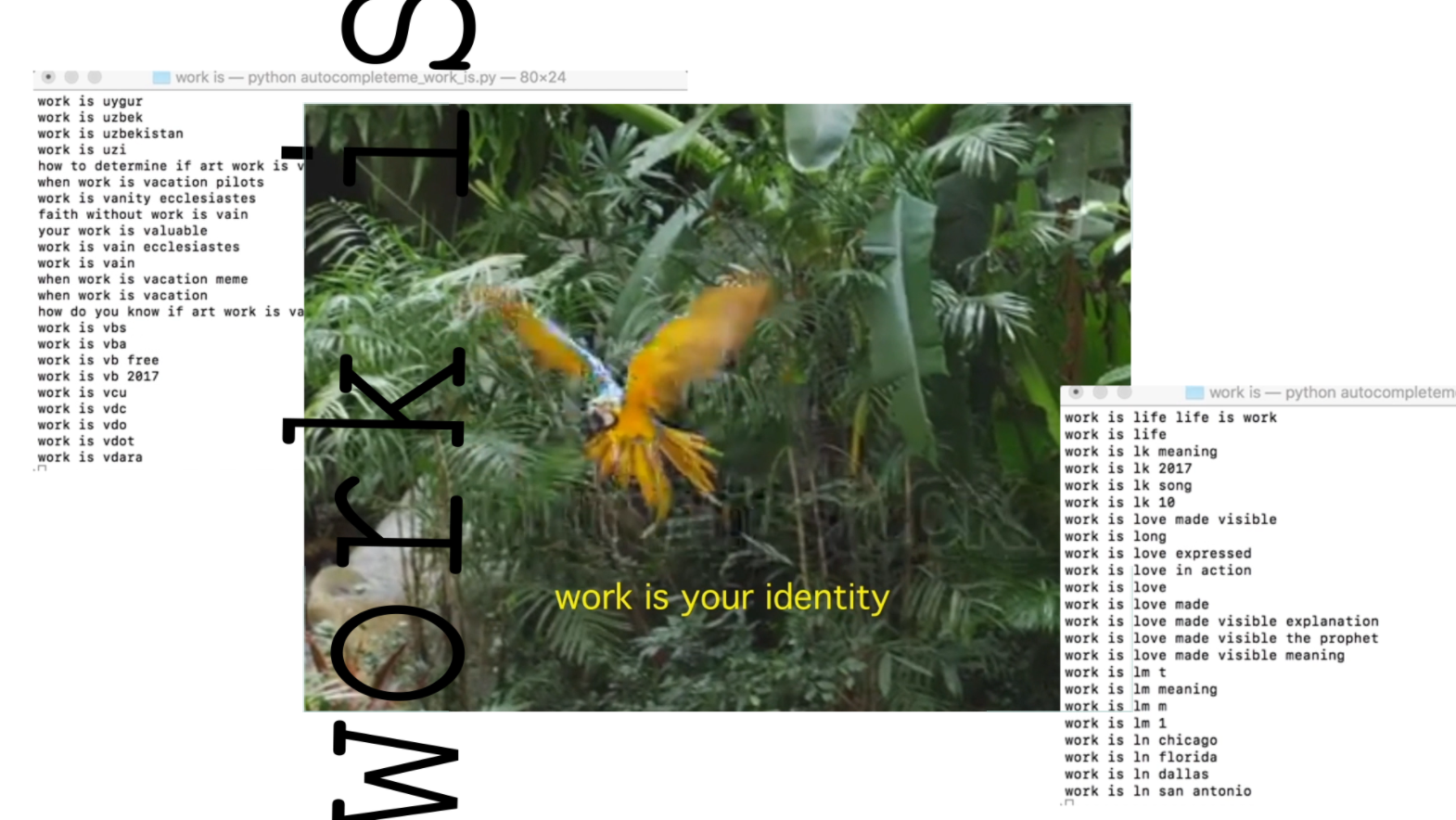

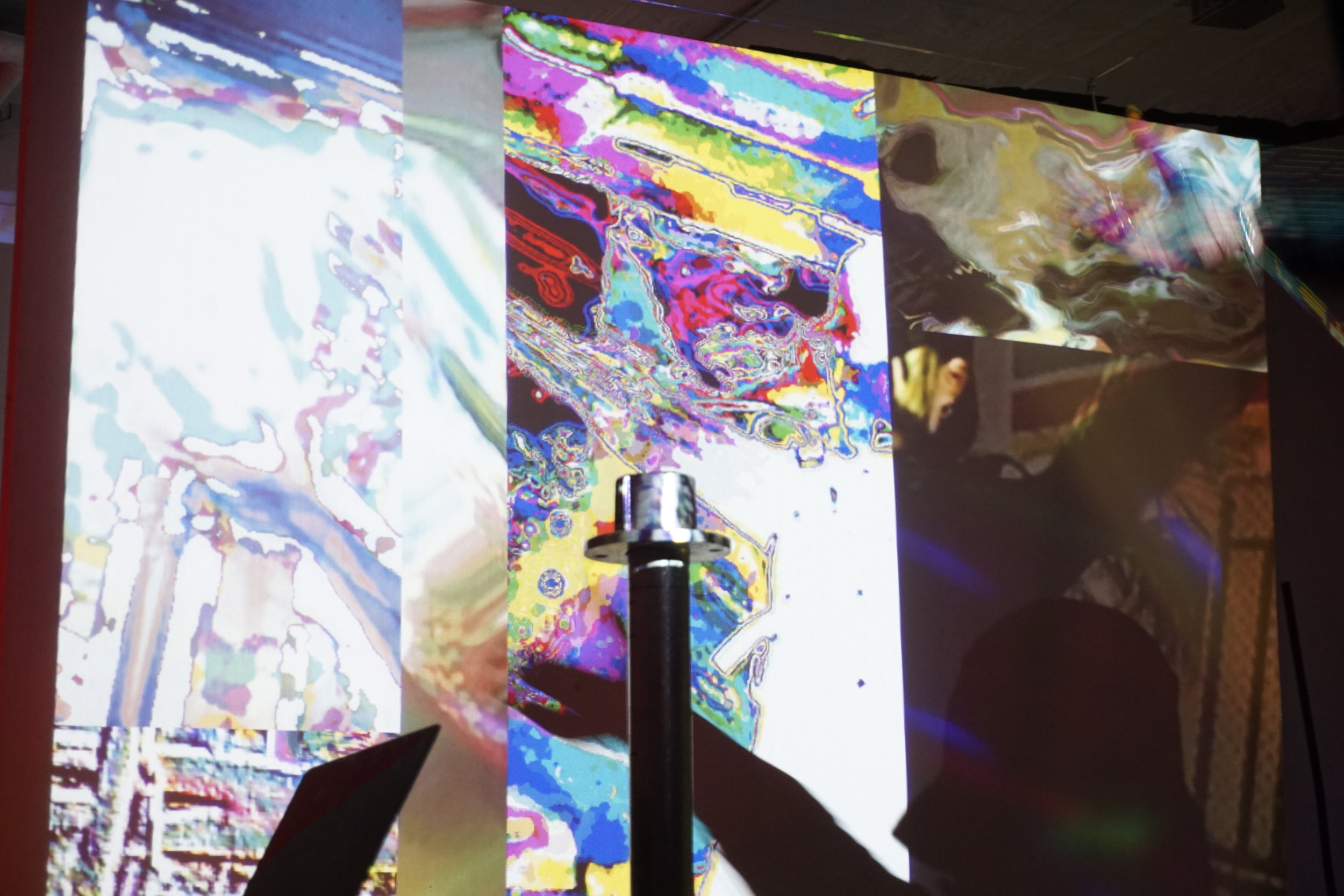

Sitting between video essay and exhibition, this work explores the ways people hide themselves and objects, and the ends they hide them for.

Opacity can be frightening or merely unsettling. It can be sinister, like soldiers in military camouflage waiting to ambush someone, or simply strange, like a mysterious object wrapped in something opaque. On the other hand, opacity is highly sought after for safety.

To complicate things, people's attempts to hide, with varying degrees of success, by trying to merge with bushes and the like, can also be quite (often darkly) humorous. Monty Python's "How Not to Be Seen" (from Flying Circus Season 2, 1970) and Hito Steyerl's HOW NOT TO BE SEEN: A FUCKING DIDACTIC EDUCATIONAL .MOV FILE (2013) are inspirations.

Some of the questions the work attempts to ask are: who gets to suffer being watched (monitored, targeted) and, conversely, who gets to be seen (in full complexity and safety)? Who gets to decide what is hidden and what isn’t? Playing on the desire to camouflage, I nod toward every being’s desire for, or right to, opacity. This is inspired by Édouard Glissant’s ontology of relation (‘On Opacity’, Poetics of Relation, 1998), which is where the title comes from, and by other artists who work with ideas around both hiding and revealing identity (Nick Cave’s Soundsuits, for example) as a way to put forth a more complex view of each other.

The video uses free 3D objects found in Blender resources.

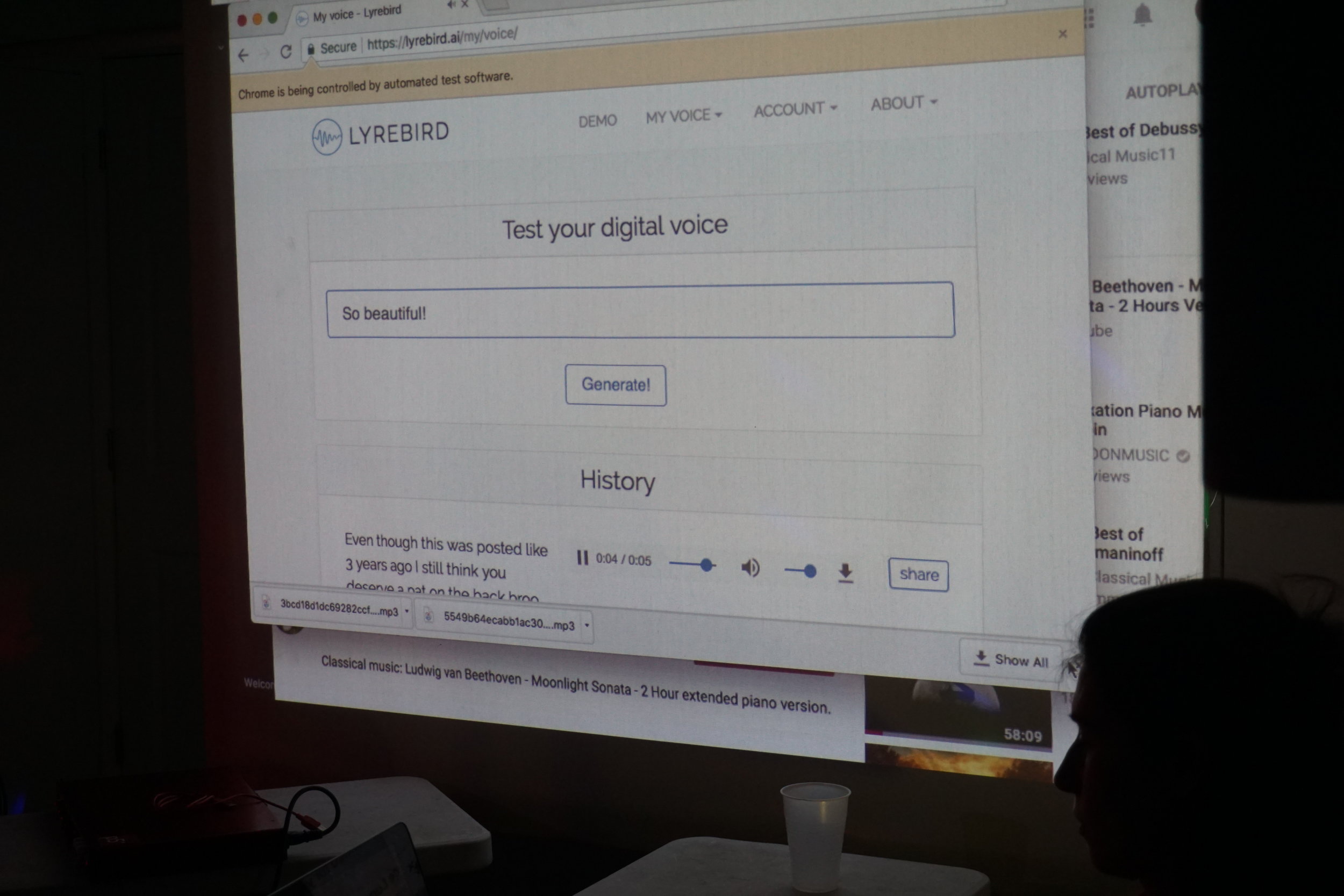

Sounds I created using samples:

Granular synthesis of the Barcarolle from The Tales of Hoffmann (Cuent - KATHRYN MEISLE and MARIE TIFFANY), from the Internet Archive

Granular synthesis of hippo recordings found on Freesound.org