Proposal for Code of Music Final

I’m creating a banana rhythm instrument. I will use this banana shape, which I am making a plaster mold of (if successful). I think it will then be cast in paper, cement, or concrete if I get the sand on time. Below are some shapes, a banana rocking GIF, and some light documentation of the MaxMSP process I made over Thanksgiving break.

Sounds themselves

To create the sounds I will use Tone.js and somehow feed it into MaxMSP via Bluetooth modules (I have yet to figure out how to make it work). Or maybe I can use a Raspberry Pi to run Tone.js in the p5 program in the browser and just connect it to speakers? Or! Just put an accelerometer inside the banana shape and run a wire to an Arduino that will be plugged into the laptop running p5! I wish this was a wireless banana, but I am not sure if I will be able to make it wireless (I'd need a very small Arduino), and then I’d just need to have my own router, I guess? Would love to hear some ideas from Luisa and the class on how I could make this work best. Thank you!

The audience would be a person or a group of people who are looking for tactile and sonic experiences that are therapeutic and meditative. Some time-out! I also see potential for this in waiting rooms in doctors’ offices to relieve stress. It’s inspired by the Sonic Arcade show (December 2017) at the Museum of Arts and Design.

Inspirations are MICRO Double Helix and the Sonic Arcade artists at the Museum of Arts and Design, especially Louise Foo and Martha Skou (FooSkou!)

Experimenting with recognizable objects that can rock back and forth. Sort of a boat-like movement. See-saw. Will use the plaster mold to cast in paper.

Once it turns to paper

Interactions

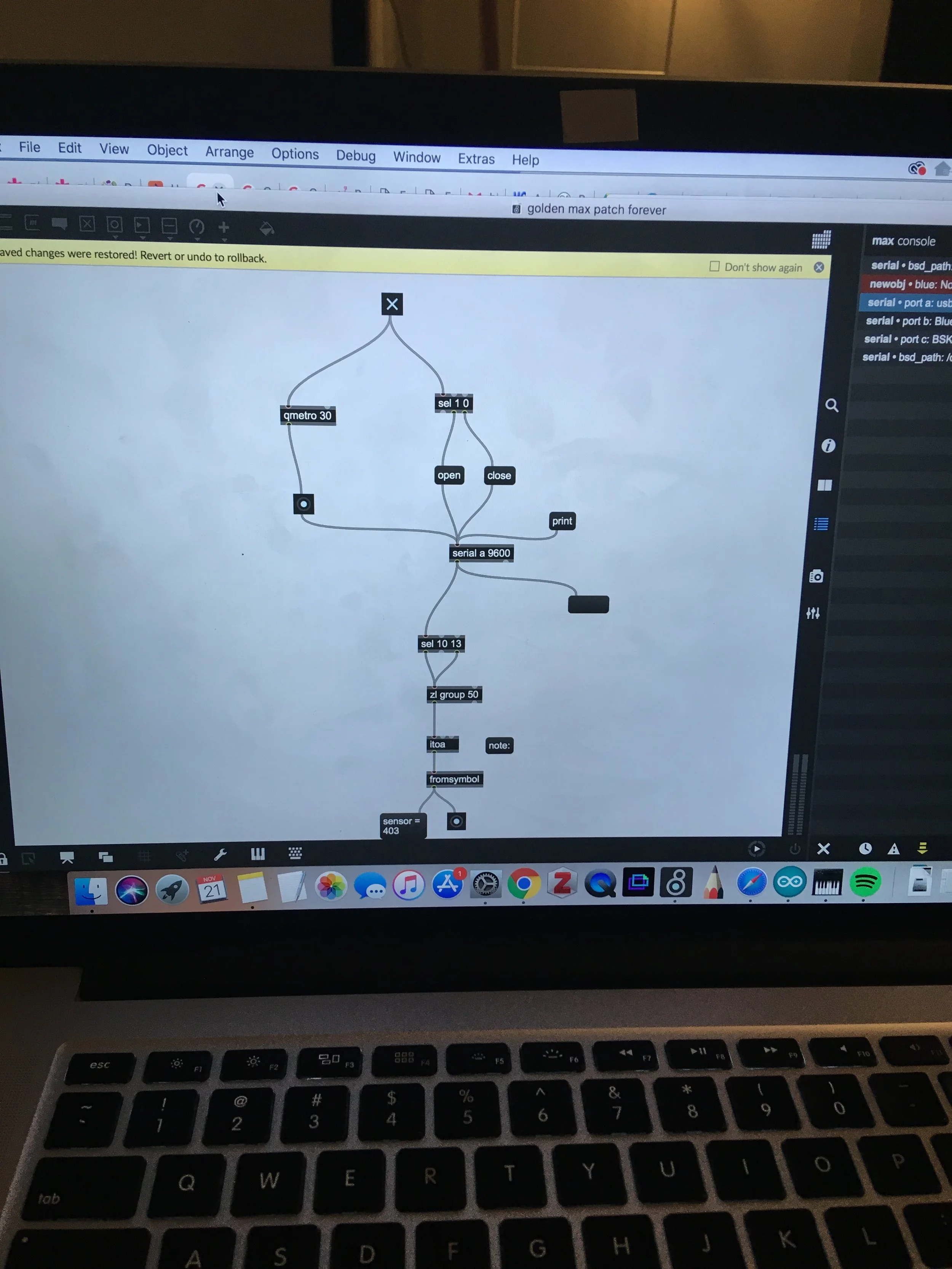

I was able to get an accelerometer to modulate sounds in Max MSP thanks to Dom’s help (and Aaron’s help last year; it’s all starting to make sense now), and I hope I can get that to work in p5. We were also able to get Max MSP to send different audio outs to separate Bluetooth speakers, which is great news because it means that I can have one program (Max) control all of the “instruments” and have them interact sonically with one another fairly easily (without needing to set up a network of Raspberry Pis).
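To carry the accelerometer mapping over from Max to p5, one option is to parse each serial line in JavaScript and scale one tilt axis to a sound parameter. The sketch below is only an illustration, not the Max patch itself. It assumes the Arduino prints comma-separated x,y,z readings in the 0 to 1023 range (a hypothetical format), and the names parseAccelLine, scaleClamped, and tiltToFrequency are made up for this example.

```javascript
// Hypothetical serial format: "x,y,z", each value in 0..1023 (assumed, not from the project).
function parseAccelLine(line) {
  const parts = line.trim().split(",").map(Number);
  if (parts.length !== 3 || parts.some(Number.isNaN)) {
    return null; // ignore incomplete or garbled lines
  }
  const [x, y, z] = parts;
  return { x, y, z };
}

// Linearly rescale a value from one range to another, clamped to the output range.
function scaleClamped(value, inMin, inMax, outMin, outMax) {
  const t = Math.min(1, Math.max(0, (value - inMin) / (inMax - inMin)));
  return outMin + t * (outMax - outMin);
}

// Map the x-axis tilt to a frequency in Hz (110..880 is an arbitrary illustrative range).
function tiltToFrequency(reading) {
  return scaleClamped(reading.x, 0, 1023, 110, 880);
}
```

In a p5 sketch, the returned frequency could then be handed to whatever synth is playing. Clamping keeps a noisy reading from pushing the pitch outside the chosen range.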

Setting up serial receiving arguments! Assigning different instruments to different audio outs.

Some interactions I considered before landing on banana boat shape (I may use them in addition to the banana if there is time but I want to keep the project manageable):

I plan to paint the banana shape (and others if I have time for other shapes) and even possibly use Bondo (plaster) and sand it to make it look more “finished”.

Harmony + Ping Pong Delay

I created a chord progression based on one that I generated using chordchord.com

The purpose of this exercise was to learn about harmony. I used Tone.js to create a Polysynth and a Part, which is basically a sequence that can be looped over and over again. The composition is very simple but the Ping Pong Delay adds a little charm and complexity to it. I used the collage I created a while back as a cover photo for this to pay homage to the lovely Ping Pong Delay I discovered on the Tone.js site after Max Horwich pointed me in this direction.

Collage by me, chord progression from https://chordchord.com/

Questions for Luisa Pereira in office hours:

I am still struggling with creating visuals that are triggered by Tone.js events. How can I do that? I tried it like this, but there was no way to time it so that it only shows up while the chord is playing. I think I’m heading in the right direction but not sure what the solution is here.

if (chord === Eb_chord) { // compare with ===; a single = would assign instead
  // ellipse(12,12,12,12);
}

Why does the ping pong ellipse I am drawing behave differently when I remove the photo from the sketch? https://editor.p5js.org/katya/sketches/HyXIzjGn7
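On the first question above, about showing a shape only while a chord is playing: one possible approach is to remember when the chord started and how long it lasts, then let draw() check that window on every frame. This is a minimal sketch under that assumption, not a definitive answer. ChordTracker and its method names are made up for illustration, and the 500 ms duration is arbitrary. Tone.js also has Tone.Draw.schedule for lining visuals up with audio time, which may be the better tool since Tone callbacks can fire slightly before the sound is heard.

```javascript
// Remembers which chord is sounding so draw() can ask about it every frame.
class ChordTracker {
  constructor() {
    this.name = null;    // name of the most recent chord
    this.startMs = 0;    // when it started, in milliseconds
    this.durationMs = 0; // how long it should count as "playing"
  }

  // Call this from the callback that triggers the chord.
  noteOn(name, nowMs, durationMs) {
    this.name = name;
    this.startMs = nowMs;
    this.durationMs = durationMs;
  }

  // True only while the named chord is still inside its time window.
  isPlaying(name, nowMs) {
    return (
      this.name === name &&
      nowMs >= this.startMs &&
      nowMs < this.startMs + this.durationMs
    );
  }
}

const tracker = new ChordTracker();

// In a p5 sketch this could look like (left as comments so the block runs anywhere):
//   inside the Tone.Part callback:  tracker.noteOn("Eb", millis(), 500);
//   inside draw():                  if (tracker.isPlaying("Eb", millis())) ellipse(12, 12, 12, 12);
```

Because draw() runs every frame, the ellipse disappears on its own once the window has passed, with no extra timer needed.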

In the image below, what do iv, iii, etc. mean?

Rolly Polly sound modulator

For the Code of Music class I'd like to create a Rolly Poly that modulates sound. It will use accelerometer data to trigger sonic changes. Oh, and I'm gonna want to add the time function to this so that it plays different things depending on the time of day, or at least plays a different pitch. Turns out p5js has time! Max just told me this and it blew my mind.
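A rough sketch of the time-of-day idea: pick a note based on the current hour. The four dayparts and the notes assigned to them are arbitrary choices for illustration, and noteForHour is a hypothetical helper. In p5, hour() returns the current hour as 0 to 23; the plain JavaScript Date object is used here so the example runs outside p5 too.

```javascript
// Hypothetical mapping: each part of the day gets its own note name.
const NOTES_BY_DAYPART = [
  { fromHour: 0, note: "C3" },  // night
  { fromHour: 6, note: "E4" },  // morning
  { fromHour: 12, note: "G4" }, // afternoon
  { fromHour: 18, note: "A3" }, // evening
];

// Return the note of the latest daypart that has already begun.
function noteForHour(hourOfDay) {
  let chosen = NOTES_BY_DAYPART[0].note;
  for (const part of NOTES_BY_DAYPART) {
    if (hourOfDay >= part.fromHour) chosen = part.note;
  }
  return chosen;
}

// Date#getHours gives the same 0..23 range as p5's hour().
const currentNote = noteForHour(new Date().getHours());
```

The resulting note name could be passed to a synth whenever the accelerometer triggers a sound, so the same gesture sounds different in the morning than at night.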

Video and Sound speeding up and slowing down

For Code of Music class I created a video and sound player in P5js that allows you to speed up and slow down a video along with its sound. The video and sound mix is something I recorded and created this summer as part of a series I created using Processing to learn how to manipulate video with sound. The chord is from a Freesound user named Polo25. I couldn’t get a clean video recording.

Since Tone.js appears to not have a video library, I used P5js. Instead of Tone’s playbackRate I used P5’s function called speed and tried to map it to mouse position but it did not work. I was able to create buttons that allow the speeding up and slowing down, however.

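function vidLoad() {
  // ele.play(1);
  // trying to use a gradual playback rate
  ele.speed = (map(mouseX, 0, width, 0.1, 1));
}

// maybe it has to be in draw?
function draw() {
  ele.playbackRate = (map(mouseX, 0, width, 0.1, 1));
}

This did not work. I got the following error:

Uncaught TypeError: ele.speed is not a function (sketch: line 61)

A likely cause: on a p5 media element, speed is a method, so it has to be called as ele.speed(value) rather than assigned with =. Assigning a number to ele.speed replaces the method, and any later call to ele.speed() would then fail with this error.

Raspberry pi projects for making media making devices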

Here is the link we used to set up

https://projects.raspberrypi.org/en/projects/raspberry-pi-setting-up/5

Project 1: Stop motion project

Tutorial used https://projects.raspberrypi.org/en/projects/push-button-stop-motion/8

For this one we got to the part where we were able to take photos of all the drawings we made using the camera connected to the Raspberry Pi. However, we ran into two issues:

1. The images came out very blurry. We read online that this happens when you take photos of something so close up. We decided to take the photos with our phones and edit them with Photoshop and use those instead.

This worked fine:

camera.start_preview()
frame = 1
while True:
    try:
        button.wait_for_press()
        camera.capture('/home/pi/animation/frame%03d.jpg' % frame)
        frame += 1
    except KeyboardInterrupt:
        camera.stop_preview()
        break

2. When it came time to stitch the photos together, the command line did not produce a video output. We hope to ask the instructor in class for help on this.

The line below did not work at all but there was no error message. We could not locate the file animation.h264 however.

avconv -r 10 -i animation/frame%03d.jpg -qscale 2 animation.h264

Code

We got the motion sensor to work but have not yet figured out the code to start the recording.

from picamera import PiCamera
from gpiozero import MotionSensor

pir = MotionSensor(4)  # motion sensor on GPIO pin 4
camera = PiCamera()
camera.capture('/home/pi/Desktop/pics.png')  # take one test photo

while True:
    # show the preview only while motion is detected
    pir.wait_for_motion()
    print("Motion detected")
    camera.start_preview()
    pir.wait_for_no_motion()
    camera.stop_preview()

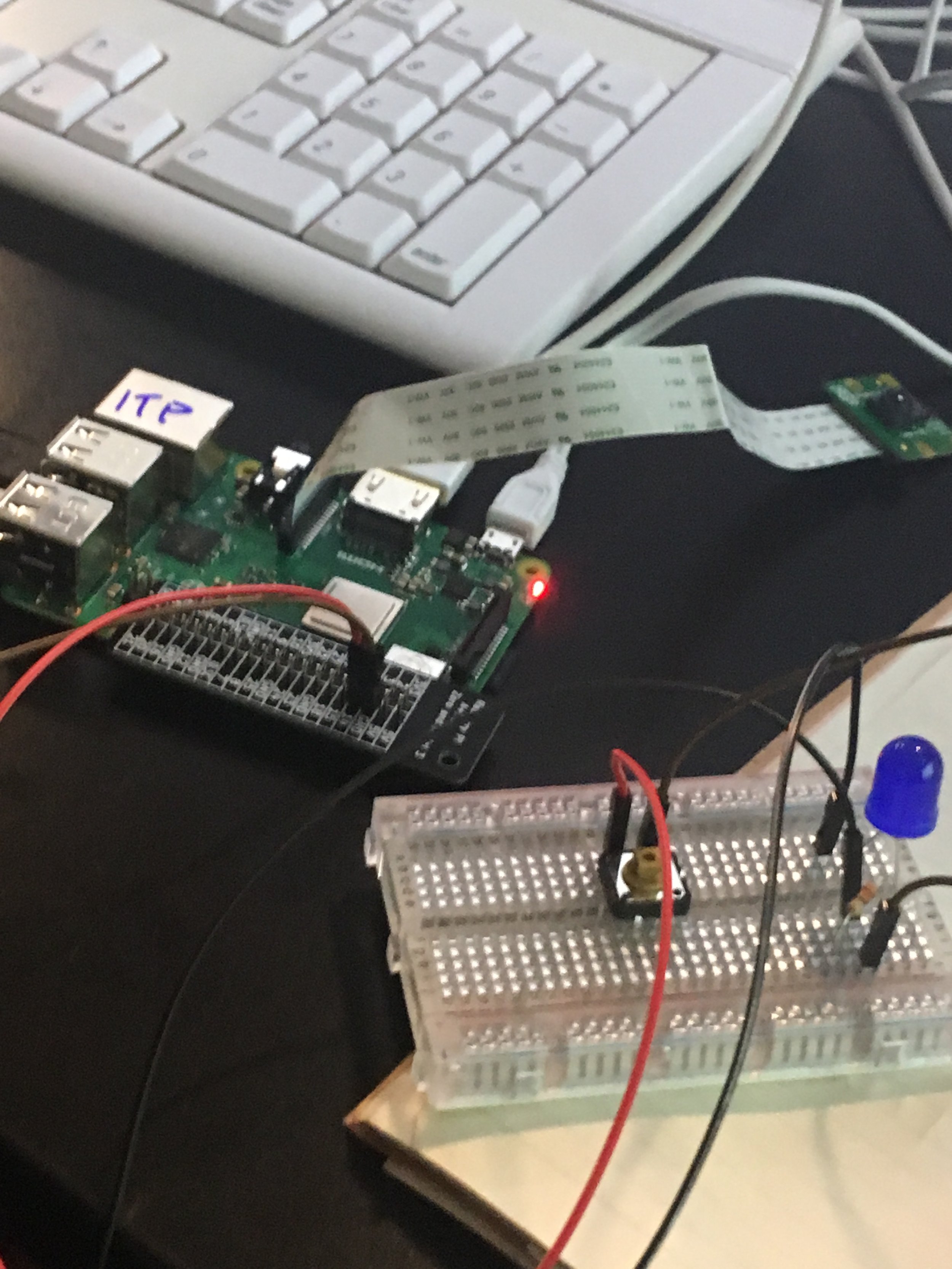

Anita and I and Jasmine who stopped by:

The Sound of a :)

I call this piece the sound of a smile. I know this won’t be a seminal work that changes the course of music composition, but I think it’s the best I can do as a beginner. I tweaked code from class (a sequence) and used a MonoSynth to play it. I used P5js and Tone.js libraries.

sketch link

The things I learned are:

What the notes “C4” and “F2” etc. mean. I was able to determine by listening to the notes laid out in order which one was which. Then I looked this up and found https://www.becomesingers.com/vocal-range/vocal-range-chart

I referenced it to understand better what sounds are within normal range.
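To make the note names concrete: in the usual scientific pitch notation, the letter is the pitch class and the number is the octave, with A4 tuned to 440 Hz and each semitone multiplying the frequency by the twelfth root of 2. The helper names below (noteToMidi, noteToFrequency) are made up for this sketch, which assumes 12-tone equal temperament and only handles single sharps and flats.

```javascript
// Semitone offset of each natural note within an octave, counted from C.
const SEMITONES = { C: 0, D: 2, E: 4, F: 5, G: 7, A: 9, B: 11 };

// Convert a name like "C4", "F2", or "Eb3" to a MIDI note number (C4 = 60).
function noteToMidi(note) {
  const match = /^([A-G])(#|b)?(-?\d+)$/.exec(note);
  if (!match) throw new Error("Unrecognized note name: " + note);
  const [, letter, accidental, octave] = match;
  let semitone = SEMITONES[letter];
  if (accidental === "#") semitone += 1;
  if (accidental === "b") semitone -= 1;
  return 12 * (Number(octave) + 1) + semitone;
}

// Convert a note name to its frequency in Hz, relative to A4 = 440 Hz (MIDI 69).
function noteToFrequency(note) {
  return 440 * Math.pow(2, (noteToMidi(note) - 69) / 12);
}
```

With this, "C4" comes out near 261.6 Hz and "F2" near 87.3 Hz, which makes it easier to compare note names against a vocal range chart.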

I wanted to see if I could replace the notes with just frequencies, and it sounded like garbage, so I decided to go back to the more musical version of this sketch and changed the sound of the envelope a bit. I also tried replacing the MonoSynth with PolySynth and PluckSynth but didn’t like the sound as much. I decided to play this sketch starting out with a smiley face and turning it into something more abstract. The end of the sketch actually starts to sound kind of interesting.

See it here on video:

Making Media Making Devices documentation

Setting up raspberry Pi

Exercise 1:

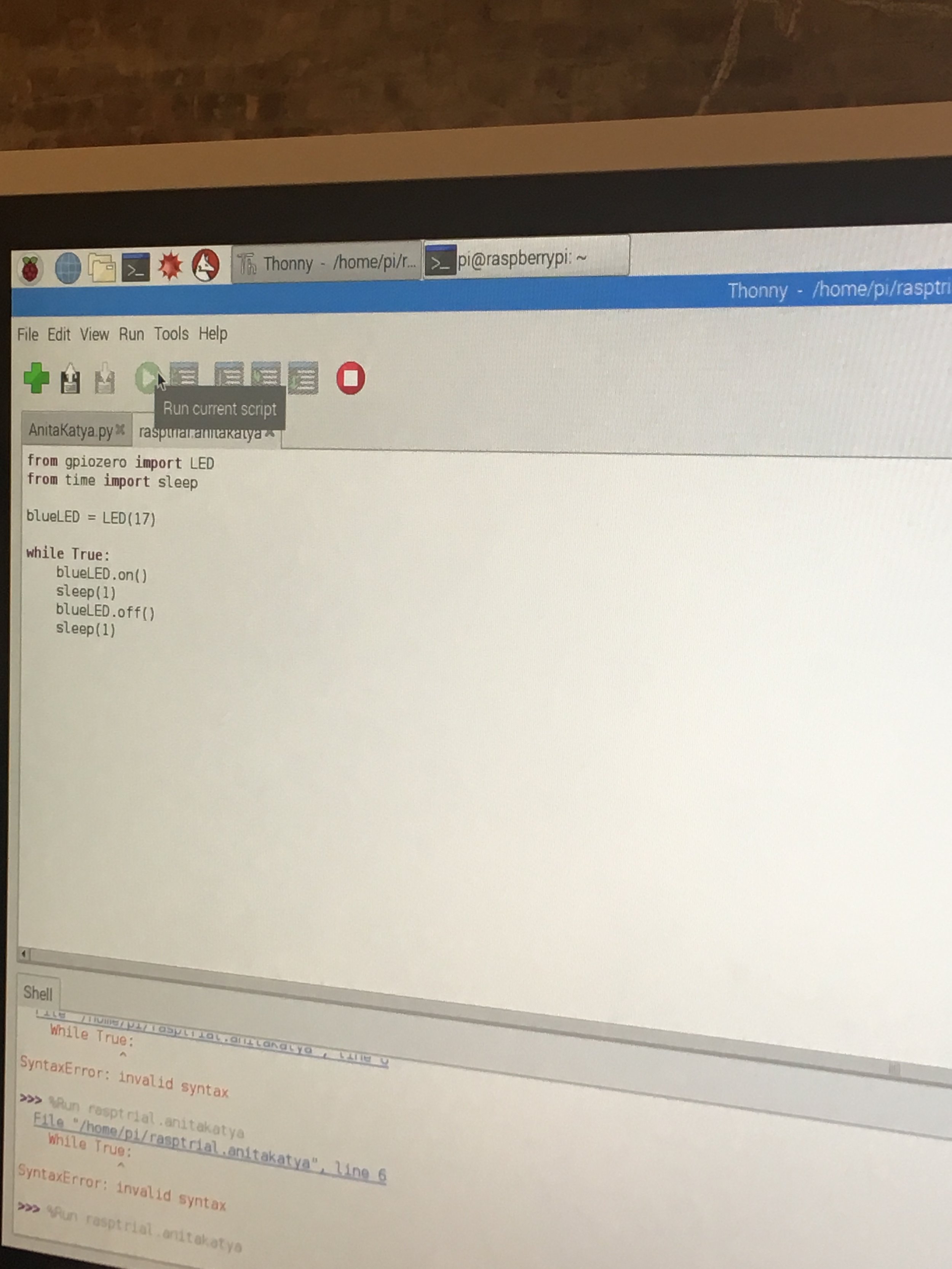

Exercise 2

Exercise 3

Getting rid of the while loop. Now we go into event-driven stuff.

Used a button to turn something on.

Video

Anita and I did it!

Recording

Two portraits

One portrait is one I snuck at the library. I liked the yellow repetition.

Nicolas from class. This friend smiles a lot. He's got a great outlook on life.

Week 3: Code of music assignment

Here is this sketch

Couldn’t record a composition using Quicktime for some reason. Maybe something wrong with the mic.

For this assignment I created a composition based on one of the examples from class. I used the random function to play the notes that I specified in an array. I remember I used numbers instead of notes (for example just 12 instead of "C2") in my previous simple compositions using Tone.js and I liked the effect of having very low notes. Tone.js reads a bare number as a frequency in hertz, so 12 means 12 Hz, which is below the roughly 20 Hz lower limit of human hearing. I think such low notes are only audible when they are put through some sort of filter, so in this case these frequencies were simply not heard.

Week 2 Rhythm assignment

Here is the sketch with some rhythm explorations. I edited the sketch from class. I'm still a little new to this, so this is a pretty simple iteration, but it is along the lines of the aesthetic I would like to create for the final project.

Not sure how to connect the actual sound to the visuals yet. I imagine it's some sort of audio mapping to variables that can be used as parameters in shapes. Will try to do this this week. Perhaps this will be clarified in class.

I’d like to learn how to create objects out of small lines that budge a little in reaction to volume and rhythm changes. Some of the lines could belong and respond to one instrument and the other lines could represent the other instrument, etc. If there are three instruments, like in this piece, there will be three groups of lines.
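One way the "lines that budge" idea might work is to turn each instrument's loudness into a small offset for its own group of lines. This is only a sketch under assumptions: the level is taken to be a number from 0 to 1 (for example, the kind of value p5.sound's p5.Amplitude reports), and budgeOffsets, offsetsForGroups, and maxBudge are hypothetical names invented here.

```javascript
// Turn one instrument's loudness (0..1) into small offsets for its group of lines.
// maxBudge is the largest shift in pixels.
function budgeOffsets(level, lineCount, maxBudge) {
  const clamped = Math.min(1, Math.max(0, level));
  const offsets = [];
  for (let i = 0; i < lineCount; i++) {
    // Alternate direction so neighbouring lines move apart instead of sliding together.
    const direction = i % 2 === 0 ? 1 : -1;
    offsets.push(direction * clamped * maxBudge);
  }
  return offsets;
}

// Three instruments give three groups of lines, each driven by its own level.
function offsetsForGroups(levels, linesPerGroup, maxBudge) {
  return levels.map((level) => budgeOffsets(level, linesPerGroup, maxBudge));
}
```

In draw(), each offset would be added to a line's resting position, so a silent instrument leaves its lines still while a loud one makes them shift.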

Thoughts on Infinite Drum Machine and Groove Pizza

Infinite Drum Machine gives me life.

It’s really inspiring. It’s very similar to the sound project that I’d like to do for my final project. I enjoy the calming sounds of staccato and all kinds of beats that don’t have a lot of reverb. It sounds like people are just sitting in a room banging on items without any special effects. Meditative and very playful. I like the intersection of music and sound art. Since I’m not musical, I use sounds more like sculptural elements and they tend to be secondary to my visual work, so it’s nice to see examples like this that feel less intimidating to me. I think complex musical compositions are great but I am not someone who can create them, so I can admire them and listen to them but will have to build something pretty minimal for my final. Definitely will take inspiration from this!

Groove Pizza is the most confusing interface I’ve ever seen. It’s really fun to use but I have no idea what anything does. I guess it’s good that it’s so “discoverable” but I am not sure I’ll ever make sense of it without labels. Maybe music experts do find it intuitive.

Code of Music - 1

For our first assignment for Luisa Pereira’s Code of Music we created simple sequencers using recorded sounds. I found all of the sounds I wanted to use on Freesound.org.

https://editor.p5js.org/katya/sketches/S12IWdnuQ

I used many sounds from freesound.org

The main one I used was this tuning of string instruments.

https://freesound.org/people/luisvb/sounds/329925/

https://freesound.org/people/Robinhood76/sounds/171446/

https://freesound.org/people/SamsterBirdies/sounds/345043/

This is a nice inspiration, though I wanted something more chaotic.

Enclosure in the making

Sound modulating slit-scan location via Processing

Everyone is doing slit-scan things these days and I decided to apply the effect on something that looks like a slit-scan already. Here is an homage to my favorite horizontals and colors at Bedford Nostrand. The station is often empty and the practically uninterrupted horizontals make for a soothing wait. They also mimic the blur of the train. The saturated industrial yellows next to tempered forest greens remind me of rain boots and camping gear.

I am using Processing to modulate where the digital slit-scan is sampled in a static image that I turned into a video. So far, I am simulating sound input with the mouse, which is sort of cheating. The next step is to use actual sound input to modulate the location and trigger the visual changes. I’ll try to get to it this summer. Inspired by recent work of @lkkchung, @loquepasa, @sofialuisa_ , and Spike Jonze. A bit of coding guidance from Koji Kanao (@array_ideas).

code:

/**
 * Transform: Slit-Scan
 * from Form+Code in Design, Art, and Architecture
 * by Casey Reas, Chandler McWilliams, and LUST
 * Princeton Architectural Press, 2010
 * ISBN 9781568989372
 *
 * This code was written for Processing 1.2+
 * Get Processing at http://www.processing.org/download
 */

import processing.video.*;

int cols;
int rows;
PImage backgroundimage;
Movie myVideo;
int video_width = 210;
int video_height = 280;
int window_width = 1000;
int window_height = video_height;
int draw_position_x = 0;
boolean newFrame = false;

void setup() {
  myVideo = new Movie(this, "small_210_280.mp4");
  size(1000, 280);
  background(#ffffff);
  myVideo.loop();
  frameRate(260);
  backgroundimage = loadImage("bckg3.jpg");
  cols = 160;
  rows = 100;
  if (backgroundimage.width % width > 0) { cols++; }
  if (backgroundimage.height % height > 0) { rows++; }
  for (int y = 0; y < rows; y++) {
    for (int x = 0; x < cols; x++) {
      image(backgroundimage, x * backgroundimage.width, y * backgroundimage.height);
    }
  }
}

void movieEvent(Movie myMovie) {
  myMovie.read();
  newFrame = true;
}

void draw() {
  int r = floor(random(250));
  int video_slice_x = (1 + (mouseX));
  if (newFrame) {
    loadPixels();
    for (int y = 0; y < window_height; y++) {
      int setPixelIndex = y * window_width + draw_position_x;
      int getPixelIndex = y * video_width + video_slice_x;
      pixels[setPixelIndex] = myVideo.pixels[getPixelIndex];
    }
    updatePixels();
    draw_position_x++;
    if (draw_position_x >= window_width) {
      exit();
    }
    newFrame = false;
  }
}

Pattern and Sound Series - P5js and Tone.js

Using p5js and Tone.js, I created a series of compositions that reconfigure temporarily on input from Kinect, a motion sensor, and generate soothing and energizing synthetic sounds that match the visual elements of the piece. The people in front of the projected visuals and sensor, in effect, create sonic compositions simply by moving, almost like a conductor in front of an orchestra but with more leeway for passive (if one simply walks by) or intentional interaction.

The sound is easy to ignore or pay attention to. It is ambient and subtle.

The next steps are to create more nuanced layers of interactivity that allow for more variation of manipulation of the sound and the visuals. Right now I am envisioning the piece becoming a subtle sound orchestra that one can conduct with various hand movements and locations of the screen.

Composition 1: Ode to Leon's OCD

In the editor.

Composition 2: Allison's Use of Vim Editor

Mouseover version for testing on the computer.

In the editor using Kinect.

Composition 3: Isobel

In the p5js editor.

Composition 4. "Brother"

In the p5js editor.

For this syncopated piece I sampled sounds of tongue clicks and claps found on freesound.org. No Tone.js was used in this piece.

Composition 5. "Free Food (in the Zen Garden)"

For this composition I used brown noise from Tone.js.

In the p5js editor.

After using mouseOver for prototyping, I switched over to Kinect and placed the composition on a big screen to have the user control the pattern with the movement of the right hand instead of the mouse.

Initial Mockups

Sketches of ideas

Inspiration

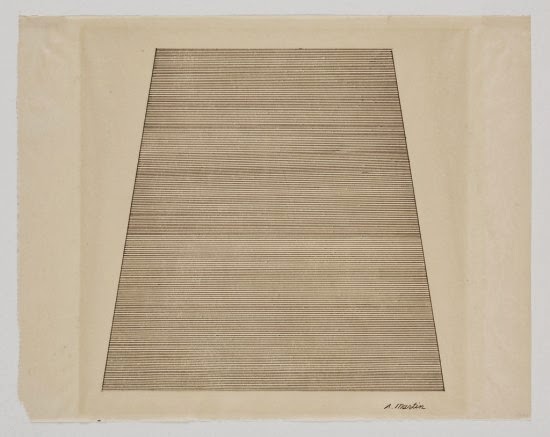

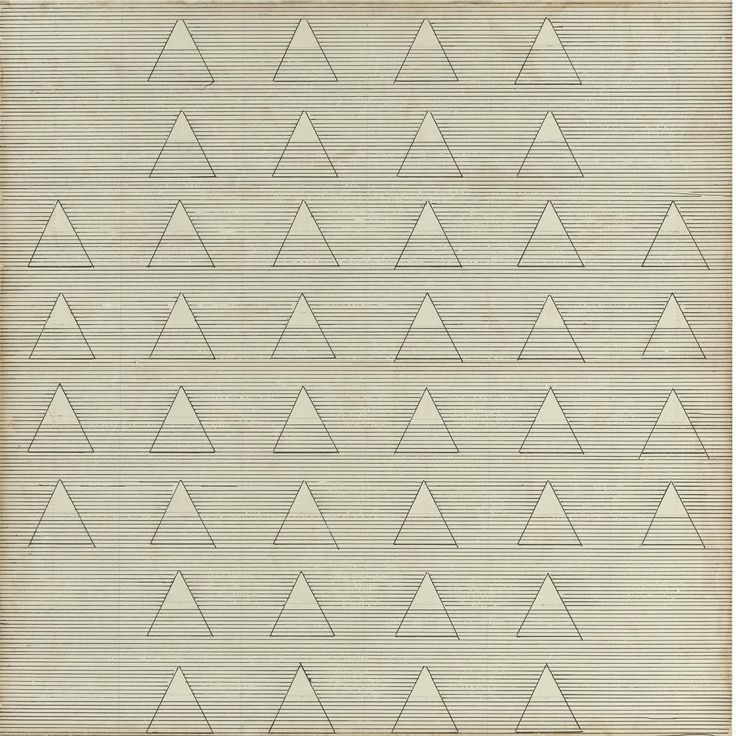

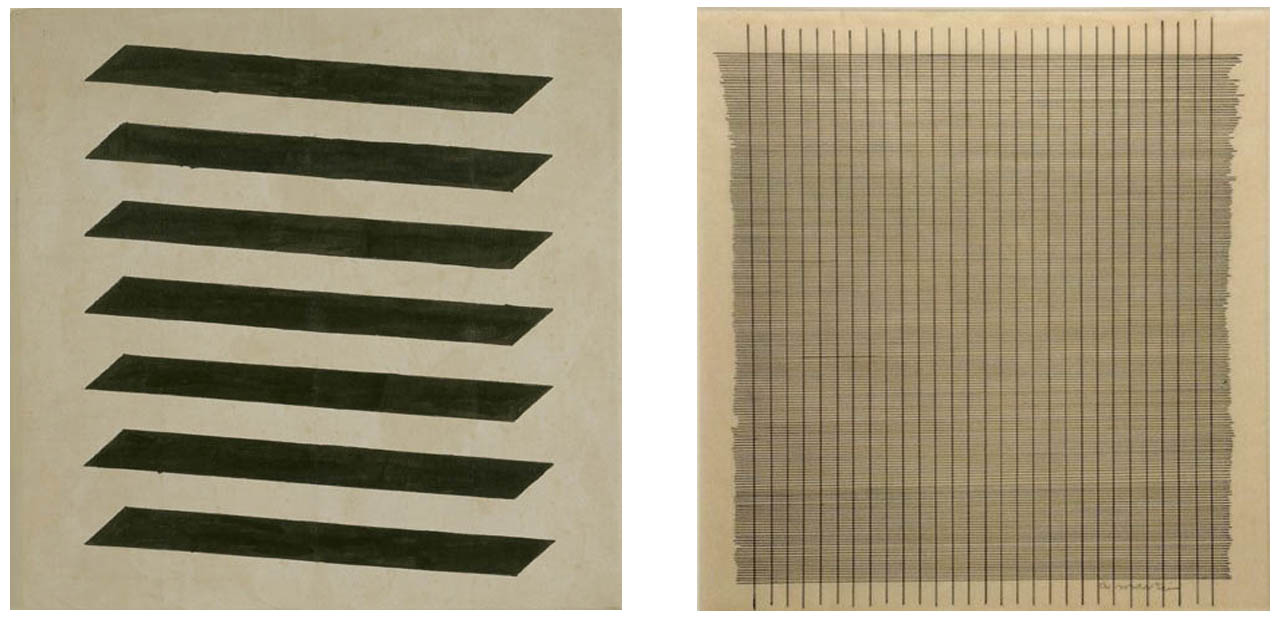

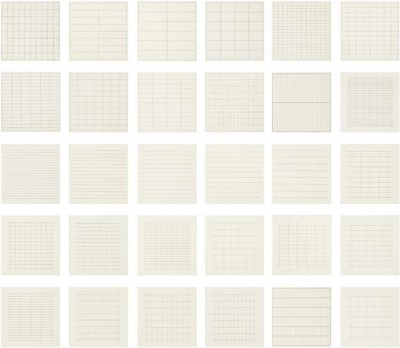

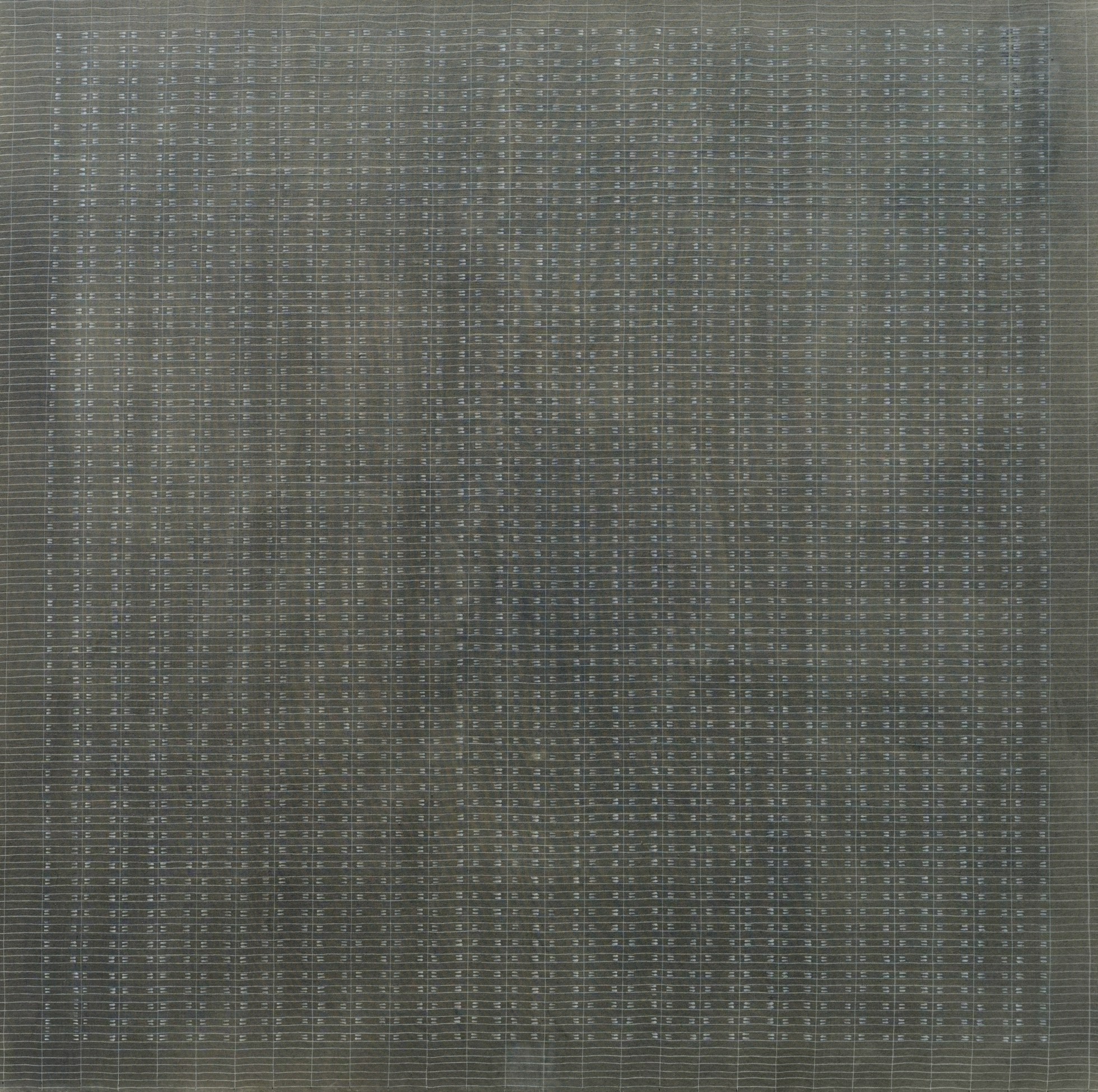

The simple grid-like designs I am creating are inspired by Agnes Martin's paintings.

Minimalist and sometimes intricate but always geometric and symmetrical, her work has been described as serene and meditative. She believed that abstract designs can elicit abstract emotions from the viewer, like happiness, love, freedom.

Code

Composition 1: "Ode to Leon's OCD" using mouseover code.

Composition 2: "Allison's Use of Vim Editor" with Kinectron code, using Shawn Van Every and Lisa Jamhoury's Kinectron app and adapting code from one of Aaron Montoya Moraga's workshops.

Composition 2 with mouseover for testing on computer.

Composition 3: "Isobel" with mouseover.

Composition 4 "Brother" with mouseover.

Composition 5. "Free Food (in the Zen Garden)"

Jitters using an array

I found some code and altered it, creating many objects that move. The code uses an array, which we haven't covered in class yet, but I wanted to make something like this for another project. Google is our friend, as they say.

Explorations of enclosures

These are explorations of shapes for a sound object series "sound playground" project I am working on at ITP. Full project here.

The sound playground is a meditative, interactive sculpture garden that encourages play, exploration, and calm. Fascinated with human ability to remain playful throughout their lives, I want to create a unique experience that allows people of all ages to compose sound compositions by setting kinetic sculptures in motion.

In addition to play, I am examining the concept of aging and changing over time. The sound-emitting kinetic objects absorb bits of the surrounding noise and insert these bits into the existing algorithmic composition, somewhat like new snippets of DNA. A sculpture's program also changes in response to how it was moved. In this way, the experiences of a sculpture are incorporated into an ever-changing song that it plays back to us, changing alongside us.

Bot That Books All The Office Hours

For my Detourning the Web final project at ITP I made a bot that books all the office hours with the instructor, Sam Lavigne. If I hadn't figured this out, I would have left one office hour booked so I could do this.

Thanks to Aaron Montoya-Moraga for help with this.

Photo courtesy of Sam Lavigne

Design Fiction: A Kissing Nozzle For Your Breathing Mask

About

This is a storyboard for a design fiction video project. Design fiction is a way to speculate about the future and "stimulate discussion about the social, cultural, and ethical implications of emerging technologies."

Story

It's the year 3015. Everyone must wear breathing mask-helmets when outdoors. The ear closures are detachable so that people can whisper to one another. The mask allows drinking through a straw and one can move food into an airtight closure near the mouth. One can push a button to reduce the UV shade and make better eye contact. The mask comes with an attachment nozzle that simulates lips, stays moisturized, and sends signals to your brain from the "bionic nerve endings" on the nozzle lips.

Inspiration

The inspiration came from Dunne and Raby's "Between Reality and the Impossible".

In this project Dunne and Raby speculate that when the earth starts running out of food, a group of people - "urban foragers" - may use "synthetic biology to create 'microbial stomach bacteria', along with electronic and mechanical devices, to maximize the nutritional value of the urban environment, making-up for any shortcomings in the commercially available but increasingly limited diet."